I recently led a webinar for the Sensu community on how to scale your monitoring by setting up a three-node cluster in Sensu Go using Sensu’s embedded etcd. Clustering improves Sensu’s availability, allows for node failure, and distributes network load.

In this post, I’ll recap the webinar and provide demos on how to set up, back up, and restore your etcd cluster, including best practices for success.

Etcd cluster members reaching consensus.

Etcd clustering fundamentals

Let’s begin with a few important etcd clustering fundamentals. At its core, etcd is a distributed key-value store that uses a consensus algorithm (Raft) to keep state consistent across nodes. Keep in mind the following:

- More than 50% of the nodes need to agree on state: For example, a three-member cluster can tolerate one failed member before it gets into an irreparable state. A five-member cluster can tolerate two failed members.

- The cluster is backed by persistent on-disk storage: Each member has a record of its state stored in a database-like structure on disk.

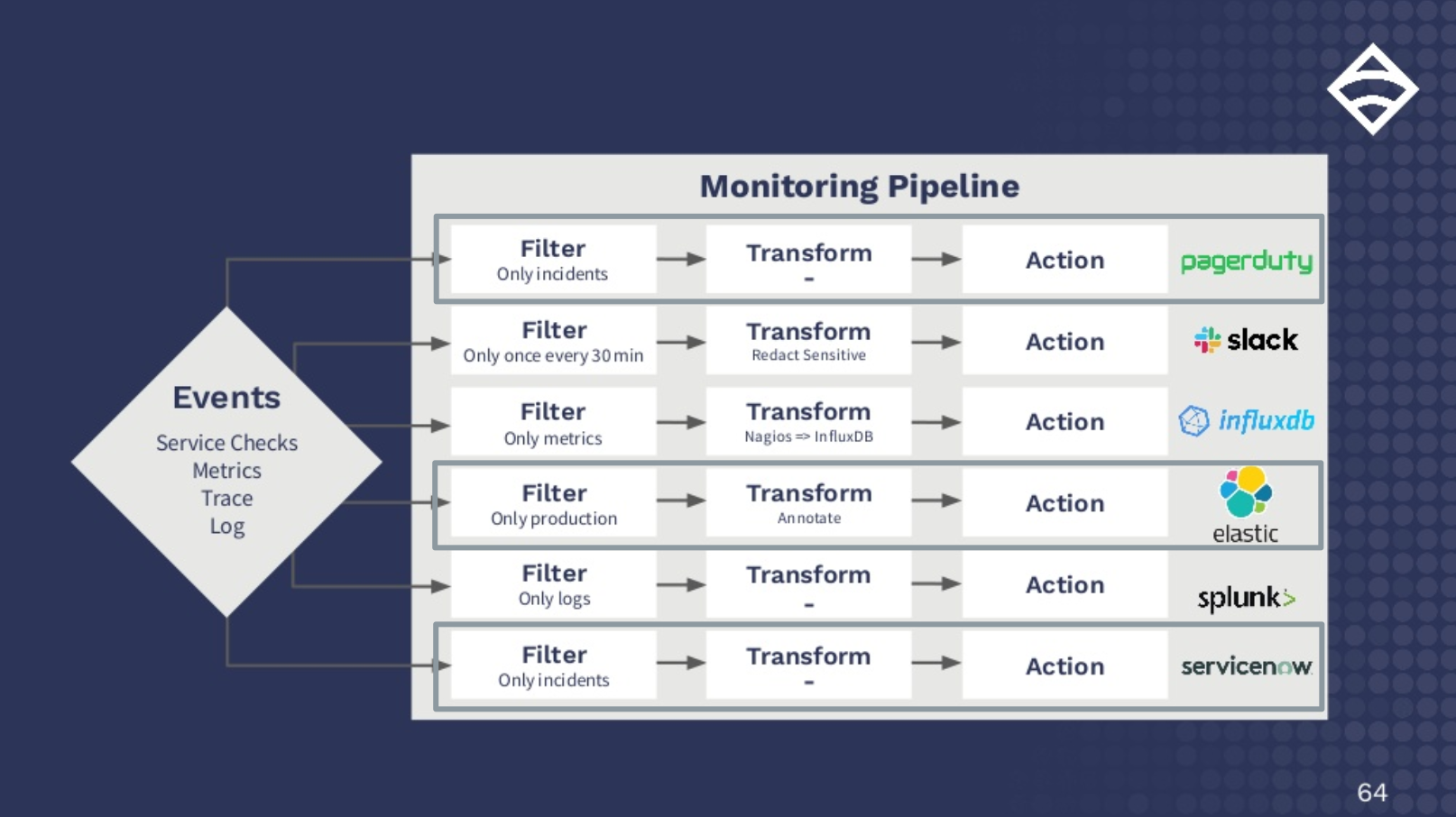

- The Sensu Go backend (by default) uses embedded etcd for both configuration and event processing: This allows Sensu to process and handle event pipelines.

- The Sensu Go backend (by default) acts as both an etcd server and client: You can configure Sensu Go to act as a client only and communicate with other Sensu Go backend etcds, or with an external etcd that you have in your infrastructure elsewhere.
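To make the "more than 50%" rule concrete, here is a minimal sketch of the arithmetic. The function names are my own for illustration and are not part of Sensu or etcd.

```python
def quorum(members: int) -> int:
    """Smallest number of members that is more than 50% of the cluster."""
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


for size in (1, 2, 3, 4, 5, 7):
    print(
        f"{size} members: quorum {quorum(size)}, "
        f"tolerates {failure_tolerance(size)} failure(s)"
    )
```

Note that a four-member cluster tolerates only one failure, the same as a three-member cluster, which is why odd cluster sizes are the usual choice.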

Creating a Sensu Go cluster using embedded etcd: Implementation choices and configuration

Before setting up your etcd cluster, you’ll need to take care of these pre-flight actions:

1. Prepare your DNS configuration (if desired): Consider in advance whether you want to use resolved hostnames instead of plain IP addresses. (For the demos here, I’ve prepared a hosts file instead of dealing with a DNS server.)

2. Prepare your TLS assets (if desired): If you want secure endpoints, this is the most important step to take upfront, as you can’t easily mix and match or switch between secure and insecure peer and client communications in the cluster. (In the following demos, I’m just showing the basics, so I won’t have secure endpoints. However, Sensu does support secure connections.)

3. Prepare firewall/security groups to allow the members to talk to each other.

4. Back up existing Sensu resources using the sensuctl dump command. In the demo, I’m building a cluster from scratch with no pre-existing resources. If instead you want to scale an existing single-node cluster, skip steps 5 and 6 and jump straight to the section below on scaling your cluster.

5. Stop the sensu-backend service on the nodes that you’re converting to a multi-node cluster.

6. Ensure the sensu-backend datastore directory is empty (the directory configured in /etc/sensu/backend.yml): By default, Sensu Go will start up as a single-node cluster and populate an etcd datastore for you, so you need to clean that out before you configure the node as part of a multi-node cluster.

7. Decide on the size of the initial cluster: Remember that more than 50% of the nodes have to agree on state. I’d recommend starting with a three-node cluster configuration, which requires two nodes to be up before the cluster will be in a consistent state.

8. Give each Sensu backend a unique etcd node name: In order to successfully scale, back up, and restore your cluster, each node needs its own name. I use simple identifiers like 01, 02, and 03.

9. Choose an etcd token for your cluster: This gives each etcd cluster on your network its own identity, so members of different clusters can’t be confused with one another.
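To show how the node names, peer and client URLs, and cluster token fit together, here is a small sketch that prints the etcd-related backend.yml settings for each member of a three-node cluster. The node names, IP addresses, and token are hypothetical placeholders, and the URLs are plain http because the demos don’t use TLS. Treat this as an illustration and check the setting names against the Sensu backend reference for your version.

```python
# Hypothetical etcd node names and IP addresses; replace with your own.
MEMBERS = {
    "01": "10.0.0.1",
    "02": "10.0.0.2",
    "03": "10.0.0.3",
}
# Hypothetical token; choose a value that is unique to this cluster.
CLUSTER_TOKEN = "sensu-demo-cluster"


def backend_config(name: str) -> str:
    """Return the etcd-related backend.yml settings for one cluster member."""
    ip = MEMBERS[name]
    # Every member lists all peers, itself included, as name=peer-URL pairs.
    initial_cluster = ",".join(
        f"{member}=http://{addr}:2380" for member, addr in MEMBERS.items()
    )
    settings = {
        "etcd-name": name,
        "etcd-listen-client-urls": f"http://{ip}:2379",
        "etcd-advertise-client-urls": f"http://{ip}:2379",
        "etcd-listen-peer-urls": f"http://{ip}:2380",
        "etcd-initial-advertise-peer-urls": f"http://{ip}:2380",
        "etcd-initial-cluster": initial_cluster,
        "etcd-initial-cluster-state": "new",
        "etcd-initial-cluster-token": CLUSTER_TOKEN,
    }
    # Quote every value so names like 01 stay strings in YAML.
    return "".join(f'{key}: "{value}"\n' for key, value in settings.items())


for name in MEMBERS:
    print(f"# backend.yml settings for etcd node {name}")
    print(backend_config(name))
```

Only the node name and the node’s own URLs differ between members; the initial cluster list and the token are identical on all three.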

Demos

The following demos assume you’ve set up and installed the sensu-backend on all the nodes used in your cluster. See the Install Sensu guide if you haven’t done this yet.

Setting up the first member of a three-node cluster

In the first demo, I show you how to set up your cluster, including important steps for configuring peer and client connections:

Setting up the second member of the cluster

We’ve set up the first member of the cluster, but since this is a three-member cluster, we need to have at least one more node up and running before the cluster is operational. In the demo below, I set up the second member and show you how to use sensuctl to get cluster health status:

Setting up the last member of the cluster

Now, we can spin up the last member of the cluster and again use sensuctl to monitor the cluster:
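If you’d like to script the same checks, here is a minimal sketch that wraps the two sensuctl subcommands for inspecting a cluster. It assumes sensuctl is installed and already configured against one of your backends; the run helper is my own and simply reports when the binary is missing.

```python
import shutil
import subprocess

# sensuctl subcommands for inspecting the cluster.
HEALTH = ["sensuctl", "cluster", "health"]
MEMBER_LIST = ["sensuctl", "cluster", "member-list"]


def run(command):
    """Run a command and return its output, or None if the binary is missing."""
    if shutil.which(command[0]) is None:
        return None
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return result.stdout + result.stderr


for command in (HEALTH, MEMBER_LIST):
    output = run(command)
    print("$ " + " ".join(command))
    print(output if output is not None else "(sensuctl not found on PATH)")
```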

Failing a member of the cluster

Let’s see what it looks like to fail one of the members:

Those are the basics of setting up the cluster. Now we can take a look at how to scale the cluster.

Scaling your cluster

To scale your cluster, you’ll want to know how to add a backend to the cluster, remove a backend from the cluster, or add a Sensu backend as an etcd client.

In this demo, I remove a server from the cluster and replace it with a different server:

Here I remove a cluster member:
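As a rough sketch of the replace-a-member flow, the snippet below builds the sensuctl commands involved. The member ID, node name, and peer URL are hypothetical; you would take the real ID from the member list. My understanding is that the replacement backend should then be started with its initial cluster state set to "existing" rather than "new", since it is joining a cluster that is already running.

```python
def member_remove(member_id: str) -> list:
    """Command that removes a member, identified by its etcd member ID."""
    return ["sensuctl", "cluster", "member-remove", member_id]


def member_add(name: str, peer_url: str) -> list:
    """Command that registers a new member by etcd node name and peer URL."""
    return ["sensuctl", "cluster", "member-add", name, peer_url]


# Hypothetical values for illustration only.
FAILED_MEMBER_ID = "2f7ae42c315f8c2d"
NEW_NAME = "04"
NEW_PEER_URL = "http://10.0.0.4:2380"

steps = [
    ["sensuctl", "cluster", "member-list"],  # look up the ID of the member to remove
    member_remove(FAILED_MEMBER_ID),
    member_add(NEW_NAME, NEW_PEER_URL),
]

for command in steps:
    print(" ".join(command))
```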

Next, let’s add the Sensu backend as an etcd client, not as an etcd cluster member. Typically, you would use sensu-backend as an etcd client like this when using an external etcd service, but you may find this useful even when using embedded etcd. The system resources needed by the etcd quorum algorithm increase as you add cluster members, and you start to see diminishing returns in responsiveness beyond five or seven members. So you may benefit from mixing some client-only Sensu backends in with the cluster members.
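For a client-only backend, the configuration is much shorter: embedded etcd is turned off and the backend is pointed at the client URLs of the existing members. The sketch below prints what I’d expect those backend.yml settings to look like. The URLs are hypothetical, and you should confirm the exact setting names and value format in the Sensu backend reference for your version.

```python
from typing import List

# Hypothetical client URLs of the existing etcd members; replace with your own.
ETCD_CLIENT_URLS = [
    "http://10.0.0.1:2379",
    "http://10.0.0.2:2379",
    "http://10.0.0.3:2379",
]


def client_only_config(urls: List[str]) -> str:
    """Return backend.yml settings for a backend that does not run etcd itself."""
    lines = ["no-embed-etcd: true", "etcd-client-urls:"]
    lines += [f'  - "{url}"' for url in urls]
    return "\n".join(lines) + "\n"


print(client_only_config(ETCD_CLIENT_URLS))
```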

Sensu Go backup and restore

Let’s talk about preparing for cluster calamity in Sensu Go. The prior generation of Sensu configuration was a set of files on the system, whereas Sensu Go uses stateful configuration stored in the etcd data store. The benefit of the new approach is how little effort it takes to keep a cluster of Sensu backends in sync, even as you make changes to your monitoring. The single point of truth for the state of your monitoring is the etcd data store, but you can still find yourself in situations where multiple cluster members fail and etcd can’t reach consensus on what that shared state is. One way to prepare for that is to make sure you have regular backups of the live Sensu resource state that can be used to restore the cluster from scratch.

You have a couple of options when it comes to backing up and restoring your cluster:

- Sensu specific: sensuctl dump and sensuctl create

- Etcd native: snapshotting and restore (I’m not covering this option in the demo; however, you can learn more about it in the etcd documentation on disaster recovery.)

Here I show you how to use the sensuctl dump command to export part, or all, of your configuration:

Note: As a security feature of Sensu Go, passwords and secrets are not included when exporting users. We recommend that you use an external SSO and secrets provider so that you can keep all of your monitoring information in your team’s public repo without being concerned about leaking sensitive information.
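Here is a minimal sketch of the Sensu-specific option: one command to export every resource to a file and one to load that file back into a fresh cluster. The backup file name is a hypothetical placeholder, and the snippet only prints the commands so you can review them before running anything.

```python
import datetime


def dump_all(path: str) -> list:
    """Command that exports all Sensu resources to a YAML file."""
    return ["sensuctl", "dump", "all", "--format", "yaml", "--file", path]


def restore(path: str) -> list:
    """Command that recreates resources from a previously exported file."""
    return ["sensuctl", "create", "--file", path]


# Hypothetical file name; a date stamp keeps successive backups apart.
backup_file = f"sensu-backup-{datetime.date.today().isoformat()}.yaml"

print(" ".join(dump_all(backup_file)))
print(" ".join(restore(backup_file)))
```

Because passwords and secrets are left out of the export, plan to recreate users’ credentials separately after a restore.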

Sensu cluster best practices

We’ve reached the end of the demos! Before we wrap up, I want to leave you with a list of best practices for cluster success:

- Keep peer cluster members close, federate clusters globally: Connect all of your clusters so you can utilize a single login when working with your monitoring configurations.

- Use persistent volumes for your etcd datastore when using containerized cluster members: This adds flexibility and allows you to bring up members quickly without having to rebuild a datastore.

- Use a load balancer for agent and API client connections to evenly distribute connections across the cluster.

- Use an odd number of etcd cluster members: The recommended cluster size is three, five, or seven. The etcd consensus algorithm requires more than 50% of members to agree, so adding a member to reach an even number doesn’t increase your tolerance for failure. If you need to scale beyond seven members, use backend clients to extend event processing (see the best practice on backend clients below).

- Use external secrets and SSO authentication providers to simplify backup and restore processes.

- Scale event processing with backend clients: The etcd quorum algorithm requires communication between members to sync state, so as the number of members grows, so do the resources needed to reach quorum. That makes for a tradeoff between redundancy and resource requirements.

- Scale further with Postgres event store: Once you’ve gone as far as you can with scaling via Sensu backend clients, you can take event processing out of etcd entirely and move it into a Postgres event store. It’s a bit more complicated, but it gets you an order of magnitude more scale without having to use a large set of federated clusters. You can read more about this in Sean’s blog post about performance tuning Sensu Go.

Additional reading

There you have it: All of the steps and best practices you need to scale your monitoring with Sensu clustering! For even more direction, visit these resources:

- Scaling Sensu Go, with details on moving event storage to Postgres: https://sensu.io/blog/scaling-sensu-go

- Sensu Go docs: https://docs.sensu.io/sensu-go/latest

- Etcd docs: https://etcd.io/docs/latest

- Try Sensu: https://sensu.io/get-started

Check out our webinar for more best practices, including how to set up our HashiCorp Vault integration and how to automate custom scripts with command plugins.